Choosing the Right Hardware to Match Your Expectations

Warren is architecturally designed to run on data center infrastructure that, at the very least, can accommodate the platform along with its resiliency strategies. Under normal circumstances, the hardware described below keeps Warren fully operational even in the event of multiple simultaneous server hardware failures across all types of server roles.

The basic hardware plan below offers high service availability and is suitable for most compute and storage requirements.

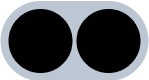

The Warren platform is deployed on 3 physical control nodes.

Since the software is built exclusively for production-grade setups, the Warren team has decided to deliver only highly redundant and resilient deployments. This applies especially when the initial purpose of the setup is a pilot or proof of concept, which should be able to withstand your availability, stress, DRP and data redundancy tests.

Warren Control Plane

| Type | Nodes | Configuration |
|---|---|---|
| Control | 3 x Warren Control Nodes for a resilient IaaS solution | |

Recommended reading on how to be smart about choosing the right hardware:

Aligning Hardware to Your IaaS Business Plan

Delivering a turnkey IaaS solution involves much more than what, for instance, vanilla OpenStack or CloudStack can provide. The following breakdown gives a high-level overview of some of the components running on the 3 Warren Control Nodes.

Some of the open-source software hosted on the control plane

- Tungsten Fabric – Software Defined Networking.

- Ceph – Software Defined Storage.

- Prometheus, Loki & Grafana – Monitoring managed workloads remotely and locally.

- Apache Mesos & Marathon – Compute cluster manager that handles workloads in a distributed environment through dynamic resource sharing and isolation.

- Kong – Gateway for presenting endpoints of most Warren components from a single root API.

- Consul – Service discovery & key-value store.
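Consul (via Raft) and the Ceph monitors (via Paxos) both need a strict majority of their members to be reachable, which is one way to see why three control nodes are a sensible minimum. The following is a generic sketch of that quorum arithmetic, not code taken from Warren; the function names are hypothetical.

```python
def quorum_size(members: int) -> int:
    """Smallest strict majority of `members` voters."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a strict majority is still up."""
    return members - quorum_size(members)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} member(s): quorum {quorum_size(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Three members tolerate one failure and five tolerate two, while a fourth member raises the quorum without adding any tolerance, which is why odd member counts are the norm.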

Warren platform components / microservices

- Base operator – Virtual machine and compute instance management.

- User Interface – Each UI user gets an individual JupyterLab-based UI Docker container for isolation.

- User Resource Interface – User management, token management and managed services management.

- Storage Manager – Block storage management, object storage management.

- Charging Engine – Collection of user resource usage (currently at hourly precision).

- Network Manager – VPC, FIP and Load Balancer management.

- Payment – Invoices, payment gateways, pricing management.

Compute Nodes

| Type | Nodes | Configuration | |

|---|---|---|---|

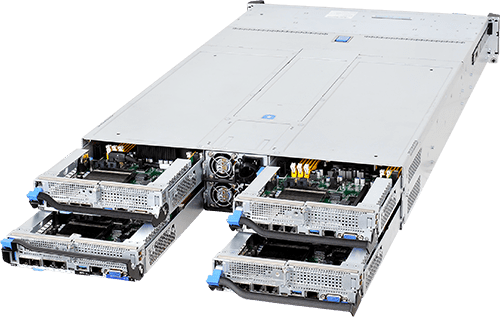

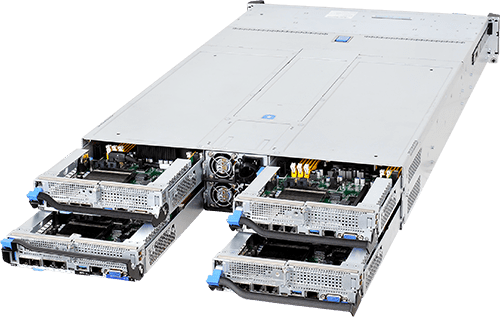

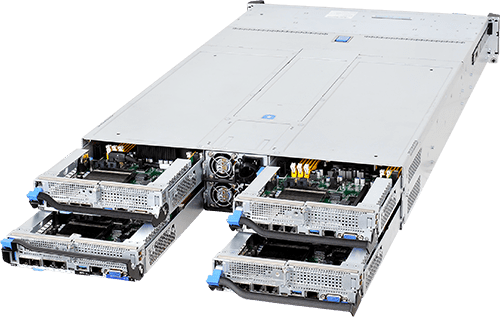

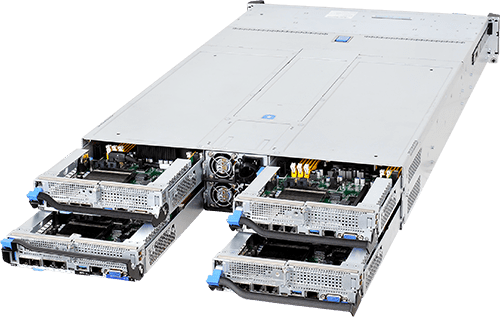

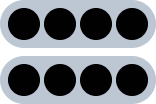

| Compute |  |

4 x Compute nodes

used to run customer workloads (minimum of 3 nodes gives you live migrations in case of hardware failure) |

|
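Whether workloads are moved by live migration or restarted on another host after a failure, the remaining compute nodes need enough free capacity to absorb them. A minimal N+1 sketch, assuming identical compute nodes and a single capacity figure per node (for example vCPUs or GiB of RAM); the 512 GiB value is hypothetical.

```python
def max_safe_utilization(nodes: int, failures: int = 1) -> float:
    """Share of total capacity that can be allocated while the workloads
    still fit on the nodes left after `failures` nodes fail.
    Assumes all nodes are identical."""
    if failures >= nodes:
        raise ValueError("cannot tolerate losing every node")
    return (nodes - failures) / nodes


def allocatable_capacity(nodes: int, per_node_capacity: float, failures: int = 1) -> float:
    """Capacity that can be handed out to customers with N+`failures` headroom."""
    if failures >= nodes:
        raise ValueError("cannot tolerate losing every node")
    return (nodes - failures) * per_node_capacity


if __name__ == "__main__":
    # Hypothetical: 4 compute nodes with 512 GiB of RAM each.
    print(max_safe_utilization(4))          # 0.75
    print(allocatable_capacity(4, 512.0))   # 1536.0
```

With 4 compute nodes, about 75% of the total capacity can be allocated while keeping room for one node failure; with 3 nodes the figure drops to about 67%.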

Ceph Distributed Storage Cluster

Block storage and S3 object storage cluster

| Type | Nodes | Configuration | |

|---|---|---|---|

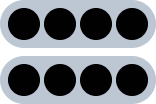

| Storage |  |

5 x Ceph nodes

for distributed block storage immune to most hardware failures |

|
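Ceph stores several copies of every object, so usable capacity is well below the raw disk total, and a cluster that should heal itself after a node failure also needs free space on the surviving nodes. The sketch assumes 3x replicated pools with the host as the failure domain and a fill target of 0.85, which matches Ceph's default `mon_osd_nearfull_ratio`; Warren's actual pool layout is not stated here, object storage pools may use different settings, and the 60 TB per node is a hypothetical figure.

```python
def usable_capacity_tb(
    nodes: int,
    raw_tb_per_node: float,
    replicas: int = 3,
    failures: int = 1,
    fill_ratio: float = 0.85,
) -> float:
    """Logical capacity (TB) that can be stored while still leaving room to
    re-replicate everything after `failures` hosts are lost, without the
    surviving disks going above `fill_ratio`.

    Assumes identical hosts, a replicated pool with the host as the failure
    domain, and no compression or erasure coding.
    """
    surviving_hosts = nodes - failures
    if surviving_hosts < replicas:
        raise ValueError("too few surviving hosts to hold every replica")
    surviving_raw_tb = surviving_hosts * raw_tb_per_node
    return surviving_raw_tb * fill_ratio / replicas


if __name__ == "__main__":
    # Hypothetical figures: 5 Ceph nodes with 60 TB of raw disk each.
    naive_tb = 5 * 60.0 / 3
    planned_tb = usable_capacity_tb(5, 60.0)
    print(f"raw / replicas: {naive_tb:.1f} TB, with failure headroom: {planned_tb:.1f} TB")
```

With these example figures the naive raw/3 estimate is 100 TB, while keeping room to heal from one lost node at 85% fill leaves about 68 TB.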

Block storage ONLY with no S3 object storage

| Type | Nodes | Configuration |
|---|---|---|
| Storage | 3 x Ceph nodes for distributed block storage immune to most hardware failures | |
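Both storage layouts keep serving data after a single node failure, but they recover differently. Assuming replicated pools with `size=3` and `min_size=2` (Ceph's defaults for replicated pools) and the host as the CRUSH failure domain, a 3-node cluster that loses a node keeps running with two copies of each object and stays degraded until the node returns, while a 5-node cluster has enough hosts to rebuild the third copy elsewhere. These settings are assumptions for illustration, not confirmed Warren configuration, and the function names are hypothetical.

```python
def can_self_heal(hosts: int, replicas: int = 3, failed_hosts: int = 1) -> bool:
    """True if enough hosts survive to place every replica on a distinct
    host again, so the cluster can return to full redundancy on its own."""
    return hosts - failed_hosts >= replicas


def keeps_serving_io(replicas: int = 3, min_size: int = 2, lost_replicas: int = 1) -> bool:
    """True if each placement group still has at least `min_size` copies,
    so reads and writes continue while the cluster is degraded."""
    return replicas - lost_replicas >= min_size


if __name__ == "__main__":
    for hosts in (3, 5):
        print(f"{hosts} hosts, 1 failed: serves I/O={keeps_serving_io()}, "
              f"self-heals={can_self_heal(hosts)}")
```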

Please refer to https://croit.io/software/docs/master/production-setup for planning out your Ceph storage cluster.

Network Equipment

| Type | Nodes | Configuration | |

|---|---|---|---|

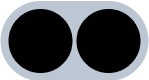

| Network |  |

2 x TOR & 1 x management switch

for redundant high speed network |

|
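The point of two TOR switches is that no single switch failure should cut a server off the data network, for example by cabling each server to both TORs with a bonded pair of uplinks. The sketch below checks a cabling plan for that property; the node and switch names are hypothetical, and the single management switch is deliberately left out of the check.

```python
from dataclasses import dataclass, field


@dataclass
class NodeCabling:
    name: str
    # Data-plane switches this server is cabled to (hypothetical names).
    data_uplinks: list[str] = field(default_factory=list)


def find_tor_spofs(nodes: list[NodeCabling], tors: list[str]) -> list[str]:
    """Fail each TOR switch in turn and report every node left without
    a data uplink. An empty result means no single TOR is a single
    point of failure for this plan."""
    problems = []
    for failed in tors:
        for node in nodes:
            if not any(sw != failed for sw in node.data_uplinks):
                problems.append(f"{node.name} isolated if {failed} fails")
    return problems


if __name__ == "__main__":
    tors = ["tor-a", "tor-b"]
    plan = [
        NodeCabling("control-1", ["tor-a", "tor-b"]),
        NodeCabling("compute-1", ["tor-a"]),  # deliberately single-homed
    ]
    for problem in find_tor_spofs(plan, tors):
        print(problem)  # compute-1 isolated if tor-a fails
```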

More information about the Warren cloud platform control plane, network topology and the technologies used can be found in the knowledge base: https://warrenio.atlassian.net/wiki/spaces/WARP/pages/300875801/Warren+Hardware+Recommendation

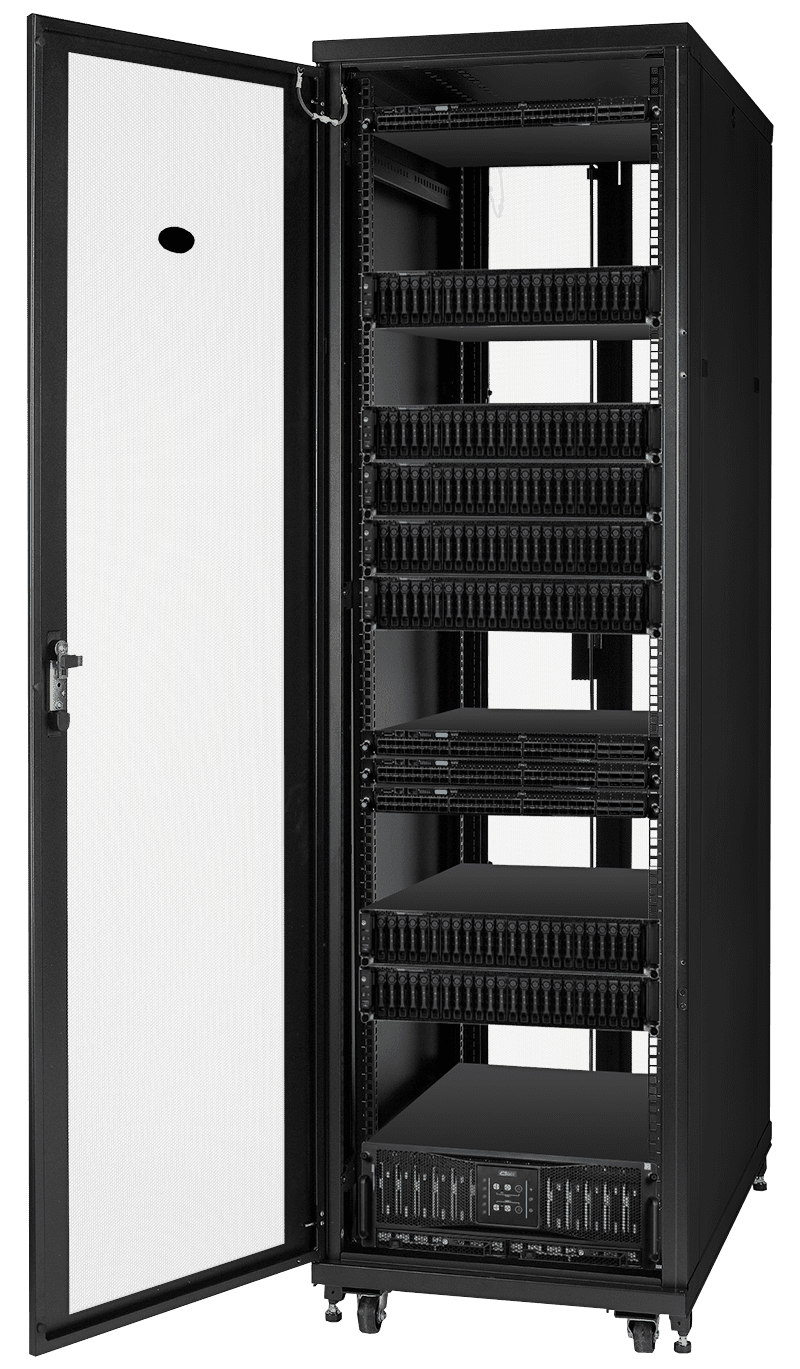

Rack planning

| Configuration | BASE (12 nodes, standalone rack) | GROWTH (32 nodes, standalone rack) | SCALE (31 nodes, 3 rack minimum) |
|---|---|---|---|
| Aggregation node | | | |
| Control nodes | | | |
| Compute nodes | | | |
| Network nodes | | | |
| Block storage nodes | | | |
| Object storage nodes (optional) | | | |

\* Every other rack
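The SCALE configuration calls for at least three racks. The page does not say why; one plausible reason, stated here as an assumption, is that spreading every role over several racks lets the platform survive the loss of a whole rack, since three control nodes placed in three different racks still leave a two-node majority when one rack is lost. The sketch below uses simple round-robin placement, with the BASE role counts and hypothetical rack names as example input only.

```python
from itertools import cycle


def spread_across_racks(role_counts: dict[str, int], racks: list[str]) -> dict[str, list[str]]:
    """Assign nodes round-robin over the racks so that each role's nodes
    are split as evenly as possible between racks."""
    layout: dict[str, list[str]] = {rack: [] for rack in racks}
    next_rack = cycle(racks)
    for role, count in role_counts.items():
        for i in range(1, count + 1):
            layout[next(next_rack)].append(f"{role}-{i}")
    return layout


def control_quorum_survives_rack_loss(layout: dict[str, list[str]]) -> bool:
    """True if losing any single rack still leaves a strict majority of
    the control nodes running."""
    control_per_rack = {
        rack: sum(1 for node in nodes if node.startswith("control-"))
        for rack, nodes in layout.items()
    }
    total = sum(control_per_rack.values())
    quorum = total // 2 + 1
    return all(total - lost >= quorum for lost in control_per_rack.values())


if __name__ == "__main__":
    # Example input only: the BASE role counts spread over hypothetical racks.
    roles = {"control": 3, "compute": 4, "ceph": 5}
    three_racks = spread_across_racks(roles, ["rack-1", "rack-2", "rack-3"])
    one_rack = spread_across_racks(roles, ["rack-1"])
    print(three_racks)
    print("3 racks survive a rack loss:", control_quorum_survives_rack_loss(three_racks))
    print("1 rack survives a rack loss:", control_quorum_survives_rack_loss(one_rack))
```

Round-robin is the simplest way to get an even spread; a real placement would also weigh power, cabling and which racks host the TOR pair.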